About AutoDrive

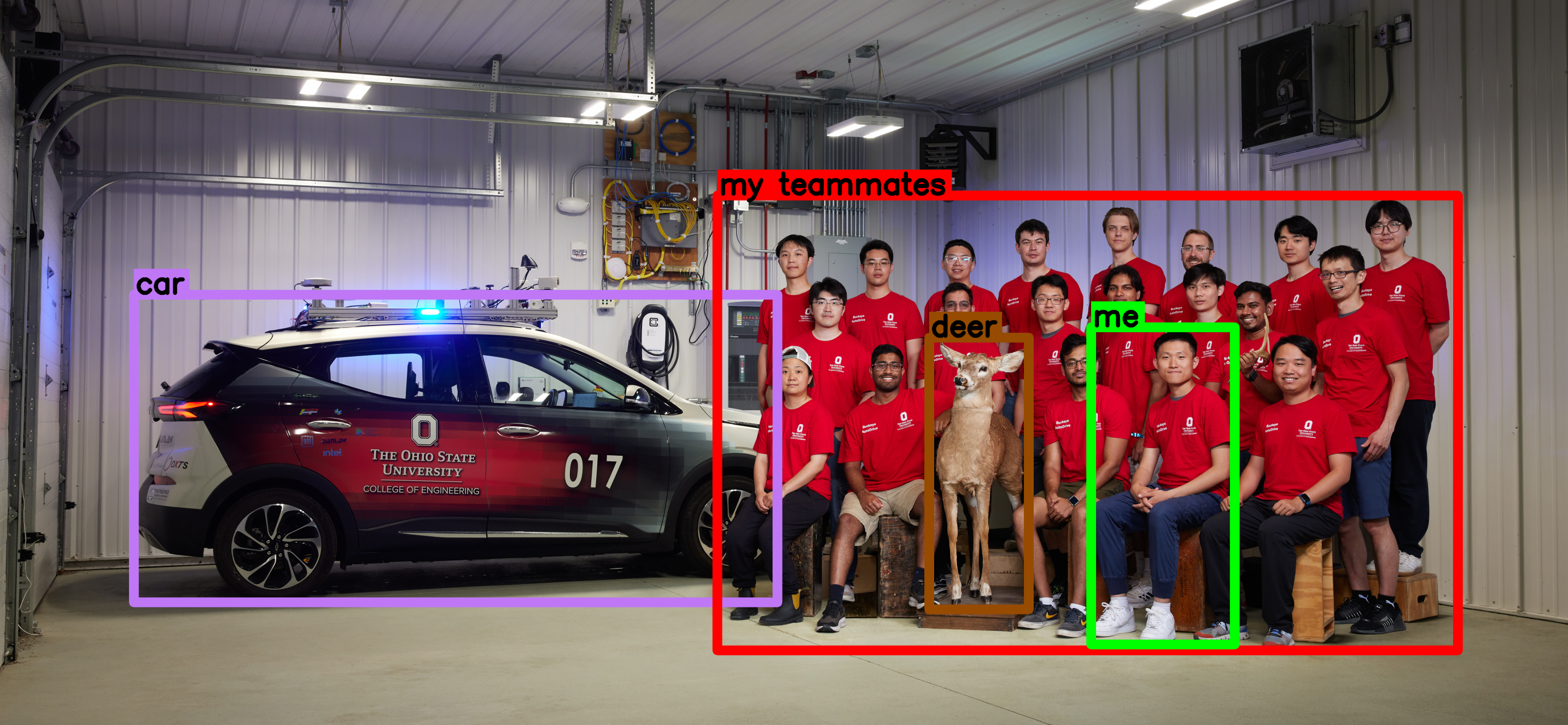

AutoDrive Challenge™ II is a student competition created by SAE in partnership with General Motors (GM). Along with nine other universities across North America, The Ohio State University's Buckeye AutoDrive team aims to develop an autonomous vehicle (AV) that can navigate urban driving courses at Level 4 automation as defined by SAE Standard J3016™. The competition spans five years and involves over 60 student members, including undergraduate, master's, and PhD students. They work on functions ranging from hardware design, sensors, perception, and planning/controls to vehicle safety and vehicle integration.

I was fortunate enough to join the team during years 2 and 3. My main focus was on the perception team. That team takes in data from the sensors, runs various computer vision algorithms to understand the environment, and passes that information on to the planning and controls team so the vehicle can navigate safely. Our perception system was designed with a modular approach incorporating algorithms such as object detection/classification, depth estimation, object tracking, and dynamics prediction. I started in year 2 on the 3D perception subteam before becoming its subteam lead. In year 3, I led the overall perception team and served as co-captain of the team.

Year 2 - 3D Perception Subteam Lead

During my first year on the team, I evaluated various 3D object detectors. I compared their inference speed when run only on Intel hardware and their accuracy on data from our Cepton LiDARs. We ultimately selected PointPillars because of its close integration with mmdetection3d and its ability to be optimized with OpenVINO for faster inference. The model was trained on synthetic data from AIODrive that was generated to match the scan pattern of our Cepton LiDARs. As the 3D perception subteam lead, I also worked closely with my group to integrate a 3D multi-object tracker, dynamics prediction, and other post-processing algorithms.
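For readers curious how tracking and dynamics prediction fit downstream of a detector like PointPillars, here is a minimal, hypothetical sketch. It is not the tracker our team used and not an mmdetection3d API. It assumes detections arrive as bird's-eye-view (x, y) box centers in meters. It assumes greedy nearest-neighbor association gated by a distance threshold and a constant-velocity motion model. The names `Track`, `update_tracks`, and `max_dist` are illustrative only.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    """Hypothetical tracked object in the bird's-eye-view (x, y) plane, in meters."""
    track_id: int
    x: float
    y: float
    vx: float = 0.0  # estimated velocity in m/s
    vy: float = 0.0

    def predict(self, dt: float) -> tuple[float, float]:
        """Constant-velocity prediction (an assumed motion model) after dt seconds."""
        return self.x + self.vx * dt, self.y + self.vy * dt


def update_tracks(tracks, detections, dt, max_dist=2.0, next_id=0):
    """Greedily associate detection centers with tracks by predicted distance.

    tracks: list[Track]; detections: list of (x, y) centers from a detector.
    Returns (updated_tracks, next_id). For brevity (a simplifying assumption),
    unmatched tracks are dropped immediately and unmatched detections start new tracks.
    """
    # Collect every (distance, track index, detection index) pair inside the gate.
    candidates = []
    for ti, track in enumerate(tracks):
        px, py = track.predict(dt)
        for di, (dx, dy) in enumerate(detections):
            d = math.hypot(dx - px, dy - py)
            if d <= max_dist:
                candidates.append((d, ti, di))
    candidates.sort()  # closest pairs are matched first

    used_t, used_d, updated = set(), set(), []
    for _, ti, di in candidates:
        if ti in used_t or di in used_d:
            continue
        used_t.add(ti)
        used_d.add(di)
        old = tracks[ti]
        nx, ny = detections[di]
        # Velocity estimated by a finite difference between consecutive positions.
        updated.append(Track(old.track_id, nx, ny, (nx - old.x) / dt, (ny - old.y) / dt))

    # Any detection left unmatched becomes a new track with zero initial velocity.
    for di, (dx, dy) in enumerate(detections):
        if di not in used_d:
            updated.append(Track(next_id, dx, dy))
            next_id += 1
    return updated, next_id
```

Calling `update_tracks` once per frame with the detector's output keeps track IDs stable while objects move. The finite-difference velocities then feed the constant-velocity `predict` step. A production tracker would typically replace the greedy matching and finite differences with optimal assignment and a Kalman filter, and would keep unmatched tracks alive for a few frames.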

Year 3 - Perception Lead and Team Captain

As perception lead, I led the team in improving our perception modules to tackle the challenge's perception tasks. We ultimately earned perfect scores for pedestrian, sign, and stop bar detection. I also re-engineered the ROS 2 perception pipeline, reducing its runtime to 60 ms. I expanded the pipeline to process inputs from 3 cameras and developed a centralized visualization tool for all our perception results to make debugging and validation easier. As team captain, I worked with the other subteam leads to ensure we met specific milestones. I also helped coordinate integration and testing so that all components worked together during the final competition.